mirror of

https://github.com/Fancy-MLLM/R1-Onevision.git

synced 2026-01-13 23:07:18 +08:00

Update README.md

This commit is contained in:

parent

973f5ea81c

commit

d1ee0fdcc2

@ -1,4 +1,4 @@

![LOGO]()

<b>R1-Onevision:An Open-Source Multimodal Large Language Model Capable of Deep Reasoning. </b>

@ -6,5 +6,7 @@

<a href="https://huggingface.co/datasets/Fancy-MLLM/R1-OneVision-Bench">🤗 Reasoning Benchmark</a> •

<a href="https://huggingface.co/Fancy-MLLM/R1-OneVision-7B">🤗 Model weights</a> •

<a href="https://huggingface.co/spaces/Fancy-MLLM/R1-OneVision">🤗 Demo</a> •

<a href="https://www.arxiv.org/abs/2407.11691">📝 Report</a>

<a href="">📝 Report</a>

</div>

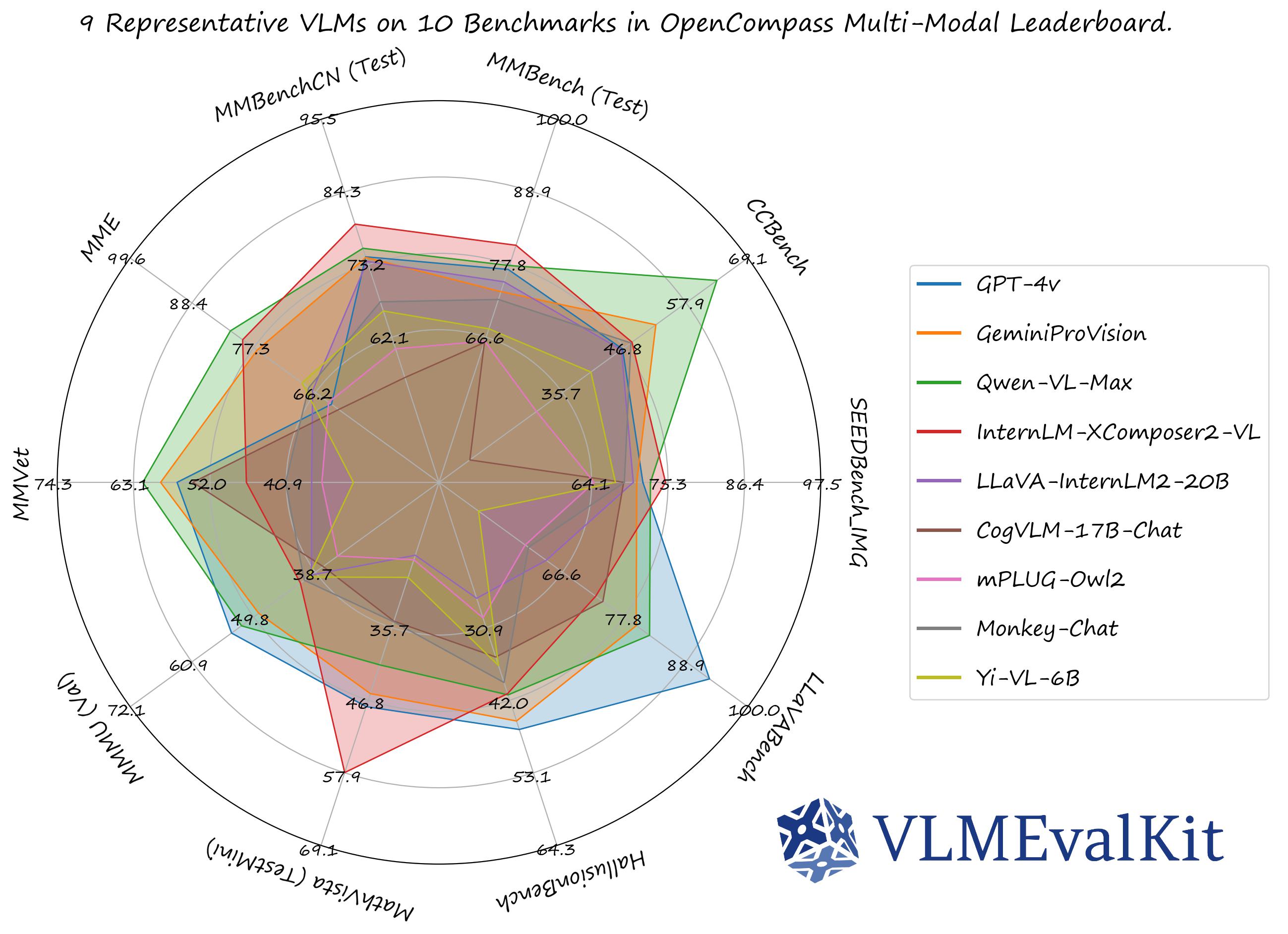

**R1-OneVision** is a versatile **multimodal reasoning large model**, designed to tackle complex visual reasoning tasks. It seamlessly integrates visual and textual data to offer precise interpretations of multimodal information, excelling in areas such as mathematics, science, deep image understanding, and logical reasoning. With its robust ability to perform multimodal reasoning, **R1-OneVision emerges as a powerful AI assistant capable of addressing a wide range of problem-solving challenges across different domains**.
